Developing an LLM typically involves several common steps:

Building an LLM

Pre-training a foundation model

Fine-tuning the foundation model

Further specialisation

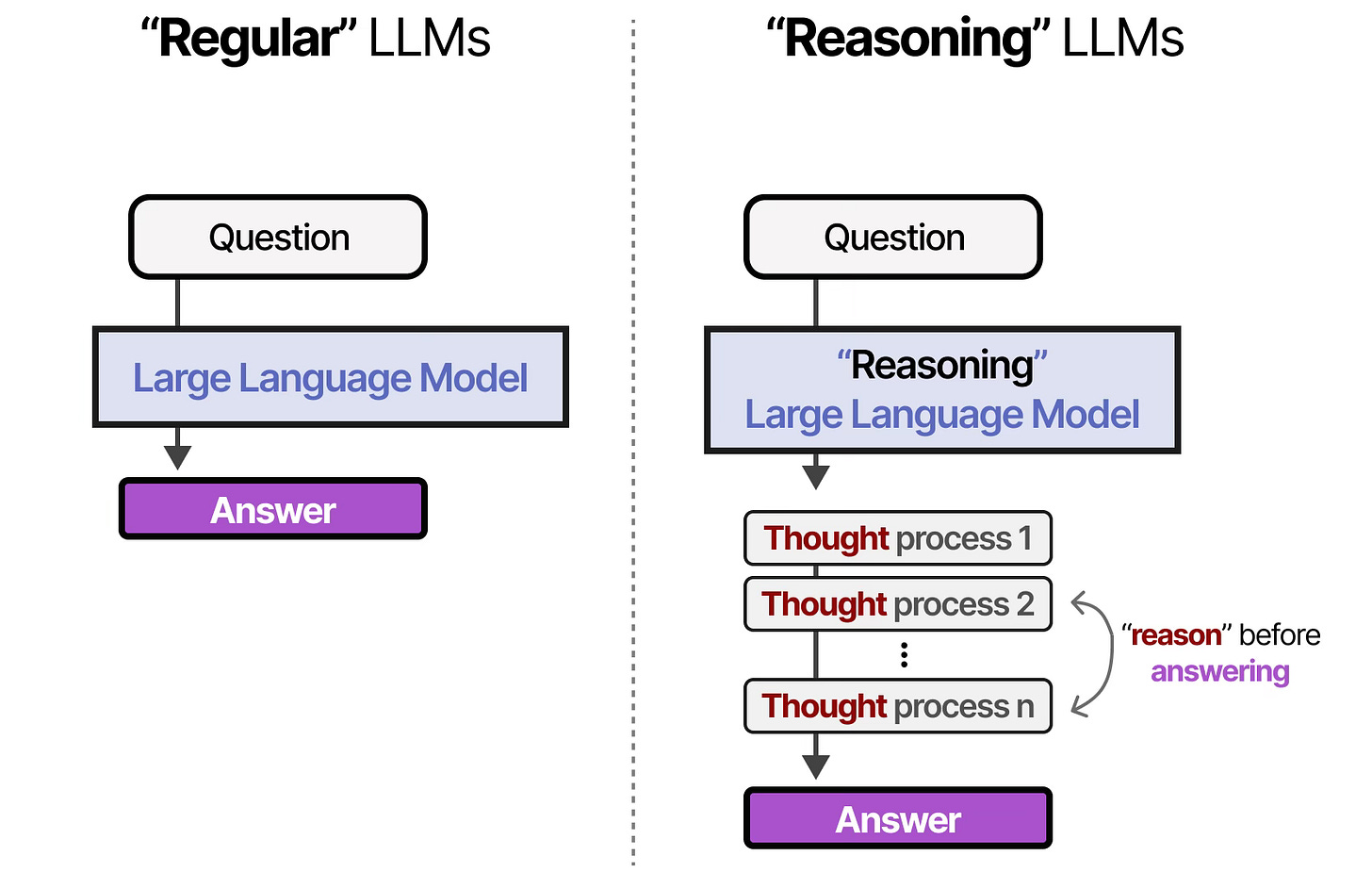

How to define a reasoning model?

I believe a reasoning model is an LLM that generates intermediate steps (sometimes called a “chain of thought”) that reveal how it arrives at its final response, instead of directly outputting an answer based solely on statistical patterns. This has two potential benefits:

The model’s output becomes more transparent, allowing you to see the entire “thinking” process.

It generates more relevant context for the model to perform self-correction and verification, which can help reduce errors and “hallucinations” in the generated content.

When should we use a reasoning model?

Reasoning models, a subset of large language models (LLMs), are designed to handle complex tasks that require multi-step logical thinking. They are best used for tasks that demand multi-step problem-solving or deep understanding, such as advanced mathematics, legal analysis, or strategic planning. Their ability to generate intermediate reasoning steps not only improves accuracy and error detection but also shows how the final answer was derived. Typical use cases include:

Solve complex mathematical puzzles where each intermediate step matters.

Debug or optimize intricate code by tracing logical execution and correcting errors.

Analyse legal cases that demand nuanced interpretation and sequential application of rules.

Support scientific research by generating and testing hypotheses in a structured, step-by-step manner.

How to build and improve reasoning models?

One straightforward approach is to let the model “think” longer. We discussed “inference-time scaling” in the article Understanding Reasoning in Large Language Models. There are several approaches:

Inference-time scaling

The term here refers to increasing computational resources during inference to improve output quality.

Chain-of-Thought (CoT) Prompting

Chain-of-thought (CoT) prompting is an approach where a language model is explicitly encouraged to “think aloud” by generating intermediate reasoning steps before arriving at a final answer. By incorporating cues such as “let’s think step by step” in the prompt, the model breaks down complex problems into manageable parts, enhancing both the accuracy and transparency of its problem-solving process.

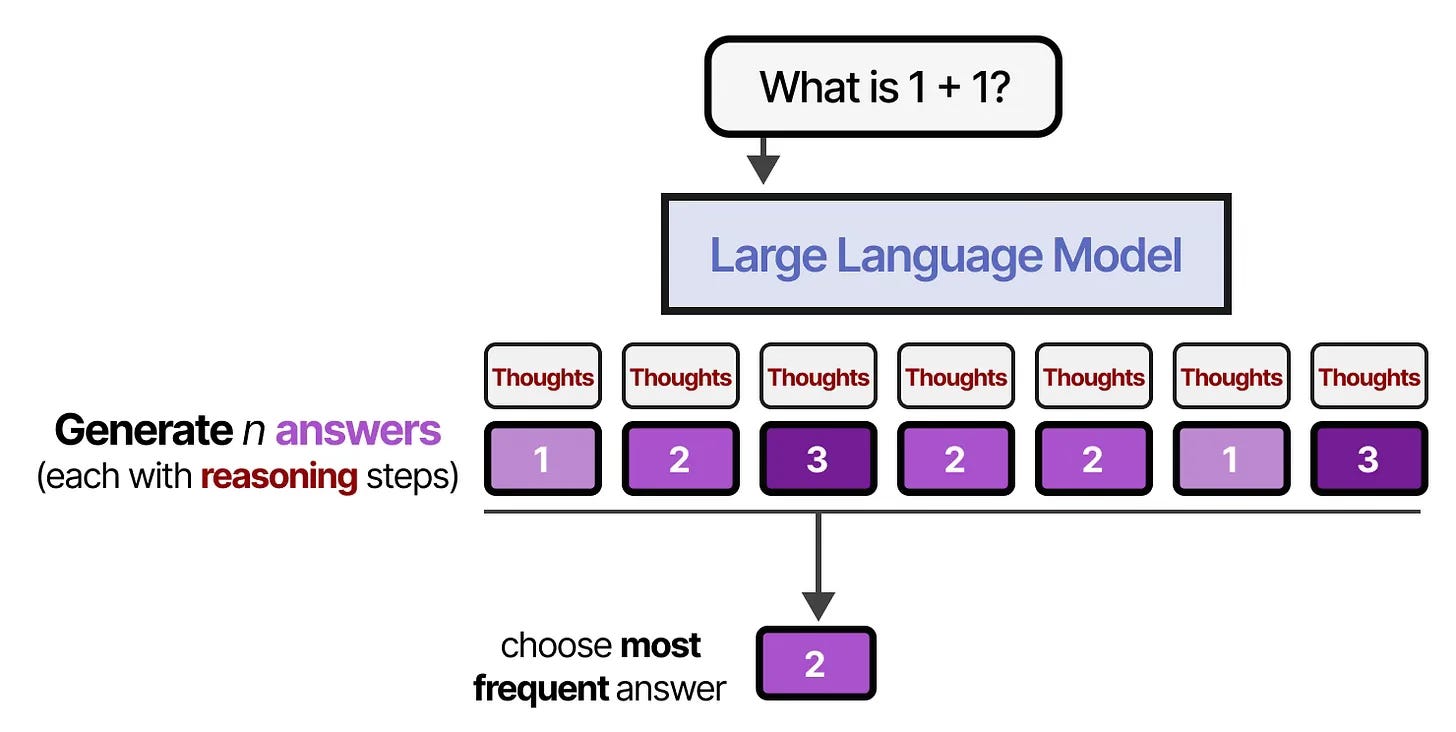
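To make the prompting pattern concrete, here is a minimal Python sketch of how a CoT prompt could be assembled and how a final answer could be pulled out of the model's reply. The function names and the "Final answer:" marker are illustrative assumptions of mine, not something taken from the referenced articles, and no model is actually called here.

```python
from typing import Optional

COT_CUE = "Let's think step by step."
ANSWER_MARKER = "Final answer:"  # hypothetical convention, chosen for this sketch


def build_cot_prompt(question: str) -> str:
    """Wrap a question so the model is nudged to write out its reasoning first."""
    return (
        f"Question: {question.strip()}\n"
        f"Finish with a line starting with '{ANSWER_MARKER}'.\n"
        f"{COT_CUE}"
    )


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the text after the last answer marker, or None if it is missing."""
    _, found, tail = completion.rpartition(ANSWER_MARKER)
    return tail.strip() if found else None


print(build_cot_prompt("What is 17 * 3?"))
# A reply such as the one below would normally come from the model.
print(extract_final_answer("17 * 3 = 51.\nFinal answer: 51"))  # 51
```

Separating the reasoning from a clearly marked final answer is one possible design choice; it keeps the intermediate steps visible while making the result easy to compare across runs.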

Majority Voting

It is an ensemble technique that enhances a reasoning model’s performance by generating multiple independent outputs for the same prompt and then selecting the answer that occurs most frequently. This approach leverages the diversity of the model’s reasoning paths, reducing the likelihood that a single mistake will dominate the final response.
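The voting step itself is small enough to sketch in Python. In the example below, `sample_answer` is a hypothetical stand-in for one sampled model call that returns only the final answer, and the light normalization (trimming and lowercasing) is my own assumption about how answers might be compared.

```python
from collections import Counter
from typing import Callable, List


def majority_vote(answers: List[str]) -> str:
    """Return the most frequent answer after light normalization."""
    if not answers:
        raise ValueError("need at least one answer to vote on")
    normalized = [a.strip().lower() for a in answers]
    # On a tie, Counter.most_common keeps the answer that appeared first.
    return Counter(normalized).most_common(1)[0][0]


def self_consistent_answer(
    sample_answer: Callable[[str], str], prompt: str, n: int = 5
) -> str:
    """Sample n independent answers for the same prompt and vote on them."""
    return majority_vote([sample_answer(prompt) for _ in range(n)])


# Canned replies stand in for five sampled model outputs.
canned = iter(["51", "51", "41", "51 ", "54"])
print(self_consistent_answer(lambda p: next(canned), "What is 17 * 3?"))  # 51
```

With a real model, the samples would need some randomness (for example a non-zero temperature), otherwise all n outputs would be identical and the vote would add nothing.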

Search Against a Verifier

Please see the “Strategies for test-time compute scaling” section of Understanding Reasoning in Large Language Models for more details.

Several methods involve a verifier, such as:

Best-of-N samples

Beam Search

Lookahead Search
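As a rough illustration of the first two methods, the Python sketch below shows Best-of-N and a simple beam search, both guided by a verifier score. The `generate`, `propose_steps`, and `verify` callables are hypothetical placeholders for an LLM sampler and a reward model, and the toy verifier only counts characters that match a target string. Lookahead search is not sketched here.

```python
from typing import Callable, List

# Hypothetical interfaces: a real system would call an LLM and a reward model.
Generate = Callable[[str], str]                 # prompt -> one full answer
ProposeSteps = Callable[[str, str], List[str]]  # (prompt, partial) -> next steps
Verify = Callable[[str, str], float]            # (prompt, text) -> score


def best_of_n(prompt: str, generate: Generate, verify: Verify, n: int = 4) -> str:
    """Sample n complete answers and keep the one the verifier scores highest."""
    candidates = [generate(prompt) for _ in range(n)]
    return max(candidates, key=lambda c: verify(prompt, c))


def beam_search(
    prompt: str,
    propose_steps: ProposeSteps,
    verify: Verify,
    beam_width: int = 2,
    max_steps: int = 3,
) -> str:
    """Grow partial solutions step by step, keeping the top-scoring few."""
    beams = [""]
    for _ in range(max_steps):
        expanded = [
            partial + step
            for partial in beams
            for step in propose_steps(prompt, partial)
        ]
        if not expanded:
            break
        expanded.sort(key=lambda text: verify(prompt, text), reverse=True)
        beams = expanded[:beam_width]
    return beams[0]


# Toy stand-ins: the "verifier" counts positions that match a target string.
TARGET = "abc"


def toy_verify(prompt: str, text: str) -> float:
    return float(sum(a == b for a, b in zip(text, TARGET)))


def toy_propose(prompt: str, partial: str) -> List[str]:
    return ["a", "b", "c"]


canned = iter(["abd", "xbc", "abc", "aaa"])
print(best_of_n("spell it", lambda p: next(canned), toy_verify, n=4))  # abc
print(beam_search("spell it", toy_propose, toy_verify))  # abc
```

The main difference the sketch tries to show is where the verifier is applied: Best-of-N scores only finished answers, while beam search scores partial solutions after every step and prunes weak ones early.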

Acknowledgment

Thanks to the authors of the excellent articles below; I really appreciate them. All the images come from these articles.

https://www.linkedin.com/pulse/understanding-reasoning-llms-sebastian-raschka-phd-1tshc/

https://www.linkedin.com/pulse/understanding-reasoning-large-language-models-bowen-li-kgadc/?trackingId=2PQWrTjXS7Ks6Nhl3ZF%2FCQ%3D%3D

https://www.vox.com/future-perfect/372843/openai-chagpt-o1-strawberry-dual-use-technology

https://www.aiwire.net/2025/02/05/what-are-reasoning-models-and-why-you-should-care/?utm_source=chatgpt.com