Note: This article is an experiment in learning about LLMs from YouTube, testing speech recognition via LLM, and exploring SubStack’s podcast feature. There is no intent for commentary. Please refer to the original YouTube video for full context.

Recently, I watched Andrej Karpathy’s video, Deep Dive into LLMs like ChatGPT, and found it incredibly insightful for understanding LLMs better.

However, watching it just once wasn’t enough. To reinforce my learning, I thought of extracting specific useful chapters and converting them into a podcast. This way, I could easily revisit key parts through repeated listening.

So, here we are downloading it from YouTube with chapter information.

[

{

"start_time": 0,

"title": "introduction",

"end_time": 60

},

{

"start_time": 60,

"title": "pretraining data (internet)",

"end_time": 467

},

{

"start_time": 467,

"title": "tokenization",

"end_time": 867

}

]Speech recognition via Whisper

The second step is getting the transcript. I’m going to use two T4 GPUs to run the large version of open-whisper. The code see below section.

And here we got the transcript

Okay now before we plug text into neural networks we have to decide how ... So, yeah, we're going to come back to tokenization, but that's for now.The Key Point of Tokenisation Part of the Video

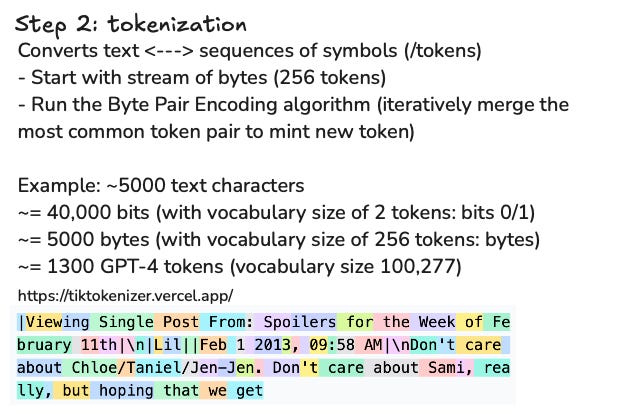

I'm thrilled about this section, as it offers an opportunity to deepen my understanding. In this chapter, the author delves into the process of tokenization in neural networks:

Text representation for Neural Networks

Neural networks require input as a one-dimensional sequence of symbols form a finite set.

The challenge lies in determining the appropriate symbols and converting text into this required format.

Binary representation and Sequence Length

Text is inherently a one-dimensional sequence, typically read from left to right and top to bottom.

Computers represent this text in binary form (bits: 0s and 1s).

While using bits provides a simple representation with only two symbols, it results in extremely long sequences, which are inefficient for neural networks.

Grouping Bits into Bytes(Most important part in my opinion)

To reduce sequence length, bits are grouped into bytes (8 bits each), allowing for 256 possible combinations (0 to 255)

This approach shortens the sequence length but increases the number of unique symbols to 256.

Byte Pair Encoding (BPE)

To further optimize, Byte Pair Encoding is employed.

BPE identifies frequently occurring pairs of bytes and merges them into new symbols, iteratively expanding the vocabulary while softening the sequence.

Vocabulary Size in Practice

In practical applications, models like GPT-4 utilise a vocabulary of approximately 100,277 symbols.

This specific vocabulary size is chosen to optimize the balance between sequence length and the granularity of representation.

Tokenization Process

Converting raw text into these defined symbols is known as tokenization

Tools like Tiktokenizer can be used to explore how text is tokenised under models like GPT-4

For example, the phrase “Hello, world“ might be tokenised into distinct tokens representing “Hello” and “ world“.

Conclusion(Fake)

I plan to publish a series of articles analyzing this video, as it introduces numerous essential concepts and provides clear explanations. Additionally, I will explore Retrieval-Augmented Generation (RAG) in the context of video analysis. Stay tuned!

The other chapters:

Code on Kaggle

References

Check K8SUG Newsletter

Welcome to check K8SUG newsletter which with the latest Kubernetes, AI, and Cloud-Native news!

Share this post